Apache Spark is a general-purpose big data processing engine. It is a powerful cluster computing framework that can scale from a single machine to a cluster of thousands of nodes. It can run on clusters managed by Hadoop YARN, Apache Mesos, or Spark’s own standalone cluster manager. To read more about the Spark big data processing framework, visit the post “Big Data processing using Apache Spark – Introduction”. In this post, we will learn how to install Apache Spark on a local Windows machine in pseudo-distributed mode (managed by Spark’s standalone cluster manager) and run it using PySpark (Spark’s Python API).

Install Spark on Local Windows Machine

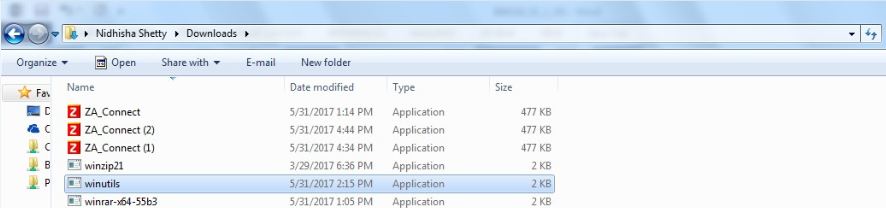


To install Apache Spark on a local Windows machine, we need to follow the steps below:

Step 1 – Download and install Java JDK 8

JDK 8 is a prerequisite for the Apache Spark installation. We can download JDK 8 from the official Oracle website.

JDK 8 Download

As highlighted, we need to download the 32-bit or 64-bit JDK 8 installer, whichever matches our system. Click the link to start the download. Once the file has downloaded, double-click the executable to start the installation and follow the on-screen instructions.
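Once the installer finishes, it can help to confirm that Java is reachable from the command line. The sketch below is only an illustration: the helper names `parse_java_version` and `installed_java_version` are made up for this post, and it assumes `java` is already on the PATH (which is only set up in Step 4).

```python
import re
import shutil
import subprocess


def parse_java_version(banner):
    """Return the quoted version from `java -version` output, or None."""
    match = re.search(r'version "([^"]+)"', banner)
    return match.group(1) if match else None


def installed_java_version():
    """Return the version of the `java` found on PATH, or None if absent."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` writes its banner to stderr, so read both streams.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    return parse_java_version(result.stderr + result.stdout)


if __name__ == "__main__":
    print(installed_java_version())
```

For a JDK 8 installation the reported version is expected to start with 1.8.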


Step 2 – Download and install Apache Spark latest version

Now we need to download the latest Spark build from the Apache Spark home page. The latest available version at the time of writing is Spark 2.4.3. The default package type, pre-built for Apache Hadoop 2.7 and later, works fine. Next, click the “spark-2.4.3-bin-hadoop2.7.tgz” download link to get the .tgz file.

Download Apache Spark

After downloading the Spark build, we need to extract the archive and copy the “spark-2.4.3-bin-hadoop2.7” folder to the Spark installation folder, for example C:\Spark. (The .tgz file unpacks to another archive, so we need to extract a second time and place the innermost extracted folder at the installation path.)
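The two-stage extraction can be avoided by unpacking the archive with Python's standard `tarfile` module, which reads the gzip layer and the tar layer in one pass. This is a minimal sketch, not part of the original walkthrough; the function name `extract_spark` and the example paths are assumptions.

```python
import tarfile
from pathlib import Path


def extract_spark(archive_path, dest_dir):
    """Extract a .tgz archive into dest_dir and return its top-level names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # Mode "r:gz" decompresses gzip and unpacks tar in a single step.
    with tarfile.open(archive_path, "r:gz") as archive:
        top_level = sorted(
            {member.name.split("/")[0] for member in archive.getmembers()}
        )
        # Only extract archives obtained from a trusted source.
        archive.extractall(dest)
    return top_level
```

For example, `extract_spark(r"C:\Downloads\spark-2.4.3-bin-hadoop2.7.tgz", r"C:\Spark")` would be expected to leave the “spark-2.4.3-bin-hadoop2.7” folder directly under C:\Spark (the download location here is hypothetical).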

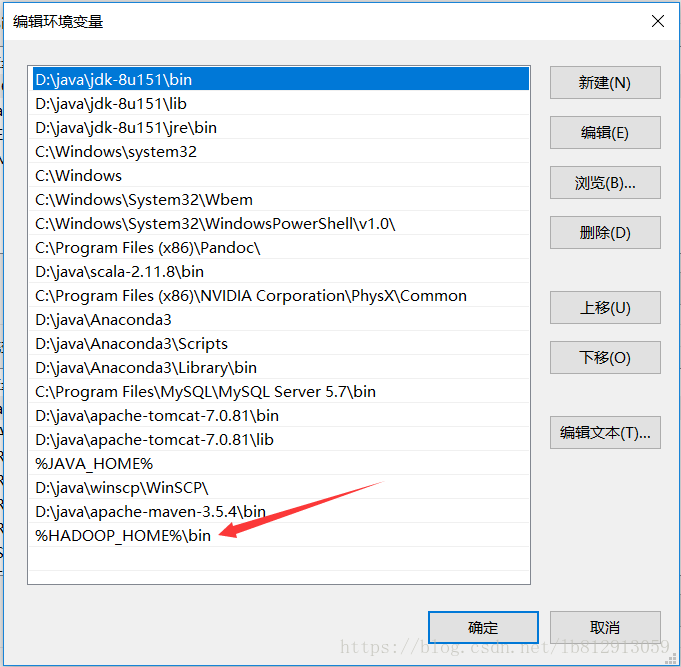

Spark installation folder

Step 3 – Set the environment variables

Now, we need to set a few environment variables that Spark requires on a Windows machine. Also, note that in these paths we need to replace “Program Files” with “Progra~1” and “Program Files (x86)” with “Progra~2”.

- Set SPARK_HOME = “C:\Spark\spark-2.4.3-bin-hadoop2.7”

- Set HADOOP_HOME = “C:\Spark\spark-2.4.3-bin-hadoop2.7”

- Set JAVA_HOME = “C:\Progra~1\Java\jdk1.8.0_212”
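After setting the variables, a new Command Prompt or Python session should be able to see them. The following sketch checks for the three variables listed above; the helper names `check_env` and `missing_vars` are illustrative only.

```python
import os

REQUIRED_VARS = ("SPARK_HOME", "HADOOP_HOME", "JAVA_HOME")


def check_env(env=None):
    """Map each required variable to its value, or None when it is unset."""
    env = os.environ if env is None else env
    return {name: env.get(name) for name in REQUIRED_VARS}


def missing_vars(env=None):
    """Return the required variables that are unset or empty."""
    return [name for name, value in check_env(env).items() if not value]


if __name__ == "__main__":
    print("Missing:", missing_vars())
```

An empty list means all three variables are visible to the current process. Environment variables set through the Windows dialog only apply to processes started afterwards, so the check should be run from a freshly opened window.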

Step 4 – Update existing PATH variable

- Modify PATH variable to add:

- C:\Progra~1\Java\jdk1.8.0_212\bin

- C:\Spark\spark-2.4.3-bin-hadoop2.7\bin

Note: We need to replace “Program Files” with “Progra~1” and “Program Files (x86)” with “Progra~2”.
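The substitution in the note, and a check that a folder really appears in PATH, can be sketched as follows. The function names are hypothetical, and the code assumes the usual short names “Progra~1” and “Progra~2”; Windows can assign different short names or have them disabled, so this is an illustration rather than a guarantee.

```python
def to_short_path(path):
    """Replace the Program Files folder names with their usual short forms."""
    # Handle "(x86)" first because it contains "Program Files" as a prefix.
    return (
        path.replace("Program Files (x86)", "Progra~2")
        .replace("Program Files", "Progra~1")
    )


def on_path(directory, path_value, sep=";"):
    """Check whether directory is one of the entries of a PATH-style string."""
    wanted = directory.rstrip("\\").lower()
    entries = (entry.strip().rstrip("\\").lower() for entry in path_value.split(sep))
    return wanted in entries
```

For instance, `on_path(r"C:\Spark\spark-2.4.3-bin-hadoop2.7\bin", os.environ["PATH"])` (after `import os`) reports whether the Spark bin folder was added.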


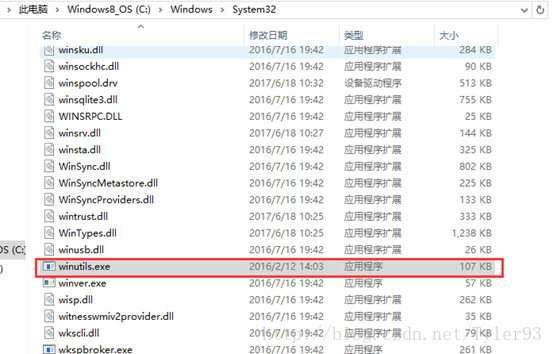

Step 5 – Download and copy winutils.exe

Next, we need to download the winutils.exe binary from the git repository “https://github.com/steveloughran/winutils”. To download it:

- Open the given git link.

- Navigate to the hadoop-2.7.1 folder (we need the folder whose Hadoop version matches the package type we selected while downloading the Spark build).

- Go to the bin folder and download the winutils.exe binary file. The direct link for the Spark build pre-built for Hadoop 2.7 and later is “https://github.com/steveloughran/winutils/blob/master/hadoop-2.7.1/bin/winutils.exe”.

- Copy this file into the bin folder of the Spark installation folder, which is “C:\Spark\spark-2.4.3-bin-hadoop2.7\bin” in our case.
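A GitHub “blob” link opens the file's web page rather than the binary itself. The sketch below assumes GitHub's convention of serving the file content when `/blob/` in the URL is replaced with `/raw/`, and then checks that winutils.exe ended up in the Spark bin folder. Both helper names are made up for illustration, and nothing is downloaded by this code.

```python
from pathlib import Path


def github_raw_url(blob_url):
    """Turn a GitHub blob page URL into the matching raw-download URL."""
    # Assumption: GitHub serves the file itself under /raw/ instead of /blob/.
    return blob_url.replace("/blob/", "/raw/", 1)


def winutils_in_place(spark_home):
    """Return True when winutils.exe exists in <spark_home>/bin."""
    return (Path(spark_home) / "bin" / "winutils.exe").is_file()
```

Calling `winutils_in_place(r"C:\Spark\spark-2.4.3-bin-hadoop2.7")` after the copy step should return True.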

Winutils.exe Download

Step 6 – Create hive temp folder

To avoid Hive bugs, we need to create an empty directory at “C:\tmp\hive”.
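The folder can be created by hand in Explorer, or from Python as sketched here. The function name `ensure_hive_tmp` is an assumption; the default path is the one named above.

```python
from pathlib import Path


def ensure_hive_tmp(path=r"C:\tmp\hive"):
    """Create the Hive temp folder (and parents) if needed; return True if it exists."""
    folder = Path(path)
    # exist_ok=True makes the call safe to repeat.
    folder.mkdir(parents=True, exist_ok=True)
    return folder.is_dir()
```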

Step 7 – Change winutils permission

Once we have downloaded winutils.exe, copied it to the desired path, and created the required Hive folder, we need to give winutils the appropriate permissions. In order to do so, o
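The instruction above is cut off in the source. A commonly used way to grant the permissions, assumed here rather than taken from the original text, is to run `winutils.exe chmod 777` on the Hive folder. The sketch below builds that command and only runs it on Windows when winutils.exe is present; the function names are illustrative.

```python
import os
import subprocess
from pathlib import Path


def chmod_command(spark_home, hive_dir=r"C:\tmp\hive"):
    """Build the winutils command that opens up permissions on hive_dir."""
    winutils = str(Path(spark_home) / "bin" / "winutils.exe")
    # Assumption: "chmod 777" is the permission change intended by this step.
    return [winutils, "chmod", "777", hive_dir]


def grant_hive_permissions(spark_home, hive_dir=r"C:\tmp\hive"):
    """Run the command on Windows; return False when it cannot be run."""
    cmd = chmod_command(spark_home, hive_dir)
    if os.name != "nt" or not Path(cmd[0]).is_file():
        return False
    subprocess.run(cmd, check=True)
    return True
```

The same command can be typed directly into a Command Prompt opened in the Spark bin folder.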


Step 8 – Download and install python latest version

Now we are ready to download and install the latest version of Python. Python can be downloaded from the official Python website at https://www.python.org/downloads/.

Download Python

Step 9 – pip install pyspark


Next, we need to install the pyspark package to start Spark programming using Python. To do so, open a Command Prompt window and run: pip install pyspark
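To confirm that the package is visible to the Python interpreter we intend to use, a small check like the one below can help. The helper name `is_installed` is hypothetical; `importlib.util.find_spec` is part of the standard library.

```python
import importlib.util


def is_installed(package_name):
    """Return True when a top-level package can be found by this interpreter."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    print("pyspark installed:", is_installed("pyspark"))
```

If this prints False even though pip reported success, pip and python may belong to different Python installations.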

Step 10 – Run Spark code

Now we can use any code editor, IDE, or Python's built-in editor (IDLE) to write and execute Spark code. Below is sample Spark code written in a Jupyter notebook:
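The original notebook code is not reproduced in the text, so the following is a minimal stand-in rather than the author's sample. It starts a local Spark session, builds a small DataFrame from made-up rows, and counts the rows that pass a filter. The application name, column names, and data are all assumptions.

```python
# Made-up sample data: (name, age) pairs.
SAMPLE_ROWS = [("Alice", 34), ("Bob", 28), ("Carol", 45)]


def run_sample(rows=SAMPLE_ROWS):
    """Show the rows as a DataFrame and return how many have age above 30."""
    # Imported here so the module loads even before pyspark is installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")          # run locally on all available cores
        .appName("LocalSparkTest")   # hypothetical application name
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame(rows, ["name", "age"])
        df.show()
        return df.filter(df.age > 30).count()
    finally:
        spark.stop()
```

Calling `run_sample()` in a notebook cell should print the three rows and, with the sample data above, return 2. If the session fails to start, the environment variables and winutils steps above are the first things to recheck.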


I have been working seriously with Apache Spark for about a year now, and it is a wonderful piece of software. Nevertheless, while the Java motto is “Write once, run anywhere”, it doesn’t really apply to Apache Spark, which depends on an added executable, winutils.exe, to run on Windows. That feels a bit odd, but it’s fine until you need to run it on a system where adding an .exe will cause an unsustainable delay (many months) for security reasons (the time needed to get the political leverage for a security team to review the code). Obviously, I’m obsessed with results and not so much with issues.

Everything is open source, so the solution lay right in front of me: hacking Hadoop. An hour later the problem was fixed. Not cleanly by many standards, but fixed.


The fix

I made a GitHub repo with a seed for a Spark / Scala program. Basically, I just override three files from Hadoop:

- org.apache.hadoop.fs.RawLocalFileSystem

- org.apache.hadoop.security.Groups

- org.apache.hadoop.util.Shell

The modifications themselves are quite minimal. I basically avoid locating or calling winutils.exe and return a dummy value when needed.

To avoid useless messages in your console log, you can disable logging for some Hadoop classes by adding the lines below to your log4j.properties (or whatever you are using for log management), as is done in the seed program.

    # Hadoop complaining we don't have winutils.exe
    log4j.logger.org.apache.hadoop.util.Shell=OFF
    log4j.logger.org.apache.hadoop.security.UserGroupInformation=ERROR

While I might have missed some use cases, I tested the fix with Hive and Thrift and everything worked well. It is based on Hadoop 2.6.5, which is what the Spark 2.4.0 package currently uses.

Is it safe?

That’s all nice and well, but doesn’t winutils.exe fulfill an important role, especially as we are touching something inside a package called security? Indeed, by removing winutils.exe we are basically bypassing most of the rights management at the filesystem level. That wouldn’t be a great idea for a big Spark cluster with many users. But in most cases, if you are running Spark on Windows, it’s just for an analyst or a small team that shares the same rights.

As all the input data for Spark is stored in CSV files in my case, there is no point in having higher security in Spark. I hope these tips can help some of you.

My system throws the following error when I try to start the NameNode on my latest Hadoop 2.2 version. My system did not find the winutils.exe file in my Hadoop bin folder. I tried the code below to fix the issue, but it did not work. Please help me sort this out.

I see that you are facing multiple issues on this one, so I will help you from scratch. Download winutils.exe from the following link, which redirects you to GitHub. Once winutils.exe is downloaded, set your Hadoop home by editing your Hadoop environment variables. The sources and the Hadoop binaries can be downloaded as well. Pointing HADOOP_HOME at the Hadoop directory from external storage alone will not help; you also need to provide the system property -Djava.library.path=path\bin to load the native libraries (DLLs). I hope this fixes your issue.


Have a glad day.

May 31, 2019